Generative A-Eye #14 - 8th Oct, 2024

A (more or less) daily newsletter featuring brief summaries of the latest papers related to AI-based human image synthesis, or to research related to this topic.

The Tuesday arXiv flood didn’t deliver any big winners today, so I gave AI-based human synthesis a break and wrote a piece about Apple’s work on gender assignment in NLP-based translation. You can see it here.

Today’s selections:

GS-VTON: Controllable 3D Virtual Try-on with Gaussian Splatting

It was only a matter of time before the very well-funded fashion synthesis research sector got round to Gaussian Splatting. I think many more forays into clothing-based GSplat are round the corner.

‘[An] image-prompted 3D VTON method (dubbed GS-VTON) which, by leveraging 3D Gaussian Splatting (3DGS) as the 3D representation, enables the transfer of pre-trained knowledge from 2D VTON models to 3D while improving cross-view consistency. (1) Specifically, we propose a personalized diffusion model that utilizes low-rank adaptation (LoRA) fine-tuning to incorporate personalized information into pre-trained 2D VTON models’

http://export.arxiv.org/abs/2410.05259

https://yukangcao.github.io/GS-VTON/

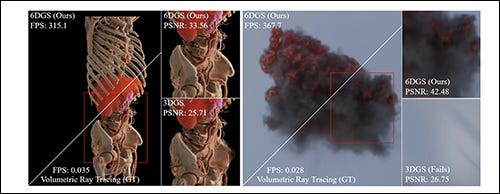
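GS-VTON's personalization step relies on LoRA. For readers unfamiliar with the idea, here is a minimal, framework-free sketch of the generic LoRA update (a frozen weight plus a scaled low-rank product), assuming the common alpha/r scaling convention. The function names are my own, and this is not GS-VTON's code.

```python
def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both as nested lists."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_merge(w, b, a, alpha):
    """Return W + (alpha / r) * B @ A.

    w: frozen pretrained weight, d_out x d_in
    b: trainable low-rank factor, d_out x r
    a: trainable low-rank factor, r x d_in
    """
    r = len(a)  # the rank of the adaptation
    delta = matmul(b, a)  # low-rank update, d_out x d_in
    scale = alpha / r
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]


def lora_param_count(d_out, d_in, r):
    """Trainable parameters added by LoRA: only B and A are trained."""
    return r * (d_out + d_in)


merged = lora_merge([[1.0, 0.0], [0.0, 1.0]], [[1.0], [0.0]], [[0.0, 2.0]], alpha=1.0)
print(merged)
```

The point of the design is cost: for a 4096 x 4096 layer at rank 8, only 65,536 parameters are trained instead of roughly 16.8 million, which is what makes per-subject personalization of a large pretrained model practical.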

6DGS: Enhanced Direction-Aware Gaussian Splatting for Volumetric Rendering

An incremental GSplat innovation, but anything that improves quality is welcome.

‘[We] revisit 6D Gaussians and introduce 6D Gaussian Splatting (6DGS), which enhances color and opacity representations and leverages the additional directional information in the 6D space for optimized Gaussian control’

http://export.arxiv.org/abs/2410.04974

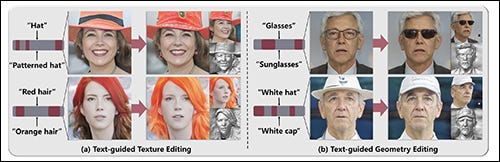
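Earlier 6D Gaussian work represents each primitive as a joint Gaussian over position and view direction, and renders it by conditioning on the current viewing direction. Assuming 6DGS keeps that slicing step, its core is standard Gaussian conditioning. The sketch below reduces it to a toy with one position and one direction dimension so it fits in scalars; the names are hypothetical, and the opacity weighting via the direction marginal is my assumption about how such schemes typically work.

```python
import math


def condition_on_direction(mu_p, mu_d, s_pp, s_pd, s_dd, d):
    """Slice a 2D Gaussian over (position, direction) at view direction d.

    Returns the conditional position mean, conditional position variance,
    and an unnormalized weight for d under the direction marginal
    (assumed here to modulate opacity).
    """
    gain = s_pd / s_dd                 # regression of position on direction
    mean = mu_p + gain * (d - mu_d)    # conditional mean shifts with the view
    var = s_pp - gain * s_pd           # s_pp - s_pd**2 / s_dd
    weight = math.exp(-0.5 * (d - mu_d) ** 2 / s_dd)
    return mean, var, weight


mean, var, weight = condition_on_direction(0.0, 0.0, 1.0, 0.5, 1.0, d=2.0)
print(mean, var, weight)
```

In the full 6D case the scalars become 3 x 3 covariance blocks and the division becomes a matrix inverse, but the structure is the same: the view direction moves and reshapes each Gaussian rather than merely recolouring it.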

Revealing Directions for Text-guided 3D Face Editing

There is a slowly gathering trend to add diffusion capabilities to older neural rendering methods such as NeRF. Here, 3D-aware Generative Adversarial Networks are augmented by an LDM, though the results are not entirely convincing.

‘[We] explore the possibility of introducing the strength of diffusion model into 3D-aware GANs. In this paper, we present Face Clan, a fast and text-general approach for generating and manipulating 3D faces based on arbitrary attribute descriptions.’

https://arxiv.org/abs/2410.04965

OmniBooth: Learning Latent Control for Image Synthesis with Multi-modal Instruction

Some of the mask-based examples in this sprawling project are very interesting, though the sources are clearly extrapolated from photos.

‘[An] image generation framework that enables spatial control with instance-level multi-modal customization. For all instances, the multimodal instruction can be described through text prompts or image references. Given a set of user-defined masks and associated text or image guidance, our objective is to generate an image, where multiple objects are positioned at specified coordinates and their attributes are precisely aligned with the corresponding guidance. This approach significantly expands the scope of text-to-image generation, and elevates it to a more versatile and practical dimension in controllability.’

https://len-li.github.io/omnibooth-web/

http://arxiv.org/abs/2410.04932

DiffusionFake: Enhancing Generalization in Deepfake Detection via Guided Stable Diffusion

Another deepfake detector using ancient and outmoded datasets such as Celeb-DF, presumably to preserve like-for-like comparisons with much older papers. Nonetheless, the approach is novel.

‘Deepfake images inherently contain information from both source and target identities, while genuine faces maintain a consistent identity. Building upon this insight, we introduce DiffusionFake, a novel plug-and-play framework that reverses the generative process of face forgeries to enhance the generalization of detection models.’

https://arxiv.org/pdf/2410.04372

AutoLoRA: AutoGuidance Meets Low-Rank Adaptation for Diffusion Models

Some notable improvements in accuracy and quality are on display here.

‘[We] introduce AutoLoRA, a novel guidance technique for diffusion models fine-tuned with the LoRA approach. Inspired by other guidance techniques, AutoLoRA searches for a trade-off between consistency in the domain represented by LoRA weights and sample diversity from the base conditional diffusion model. Moreover, we show that incorporating classifier-free guidance for both LoRA fine-tuned and base models leads to generating samples with higher diversity and better quality.’

http://export.arxiv.org/abs/2410.03941
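AutoLoRA's abstract describes balancing a LoRA fine-tuned model against its base model, with classifier-free guidance applied to both. The exact weighting is in the paper; the sketch below only shows the general shape of such schemes, where guidance is a linear extrapolation between two noise predictions. The `hypothetical_lora_guidance` combination is an illustrative assumption, not AutoLoRA's published formula.

```python
from typing import List, Sequence


def extrapolate(strong: Sequence[float], weak: Sequence[float], w: float) -> List[float]:
    """Linear guidance: weak + w * (strong - weak).

    w = 1 returns `strong`; 0 < w < 1 interpolates; w > 1 extrapolates.
    """
    return [lo + w * (hi - lo) for hi, lo in zip(strong, weak)]


def cfg(eps_cond: Sequence[float], eps_uncond: Sequence[float], w: float) -> List[float]:
    """Classifier-free guidance: push the conditional prediction away from the unconditional one."""
    return extrapolate(eps_cond, eps_uncond, w)


def hypothetical_lora_guidance(eps_lora_c, eps_lora_u, eps_base_c, eps_base_u,
                               w_cfg: float, gamma: float) -> List[float]:
    """HYPOTHETICAL: apply CFG inside each model, then blend base toward LoRA.

    gamma near 1 favours the LoRA domain; gamma near 0 favours the base model's diversity.
    """
    lora = cfg(eps_lora_c, eps_lora_u, w_cfg)
    base = cfg(eps_base_c, eps_base_u, w_cfg)
    return extrapolate(lora, base, gamma)


print(cfg([0.5, 1.0], [0.5, 0.0], 3.0))
```

The appeal of this family of methods is that it needs no retraining: everything happens at sampling time, by re-weighting predictions the models already produce.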

My domain expertise is in AI image synthesis, and I’m the former science content head at Metaphysic.ai. I’m an occasional machine learning practitioner, and an educator. I’m also a native Brit, currently resident in Bucharest.

If you want to see more extensive examples of my writing on research, as well as some epic features (many of which hit big at Hacker News and garnered significant traffic), check out my portfolio website at https://martinanderson.ai.