Generative A-Eye #17 - 15th Oct,2024

A (more or less) daily newsletter featuring brief summaries of the latest papers related to AI-based human image synthesis, or to research related to this topic.

There may be no updates for a while after this, as we are moving house.

The research community continues to fixate on Tuesday, with around 250 papers going live in Computer Vision today, versus less than 100 yesterday.

Here are the ones that caught my eye.

Tex4D: Zero-shot 4D Scene Texturing with Video Diffusion Models

This offering institutionalizes CGI as the driving force, effectively turning the neural element into an AI-aided ray-tracer. Nonetheless, there’s some interesting innovation here in regard to persistent memory for depicted content.

I (almost) take this release as a representative admission of defeat from the research sector, in regard to its prior hopes of truly taming the latent space. At the moment, this kind to vid-2-vid approach offers the only viable method for narrative continuity in the genVid space.

'3D meshes are widely used in computer vision and graphics for their efficiency in animation and minimal memory use, playing a crucial role in movies, games, AR, and VR. However, creating temporally consistent and realistic textures for mesh sequences remains labor-intensive for professional artists. On the other hand, while video diffusion models excel at text-driven video generation, they often lack 3D geometry awareness and struggle with achieving multi-view consistent texturing for 3D meshes. In this work, we present Tex4D, a zero-shot approach that integrates inherent 3D geometry knowledge from mesh sequences with the expressiveness of video diffusion models to produce multi-view and temporally consistent 4D textures'

http://export.arxiv.org/abs/2410.10821

https://tex4d.github.io/

Sitcom-Crafter: A Plot-Driven Human Motion Generation System in 3D Scenes

Though the abstract is riffing a little on AI Seinfeld for attention, this is an interesting motion and motivation system for neural characters.

'[A] comprehensive and extendable system for human motion generation in 3D space, which can be guided by extensive plot contexts to enhance workflow efficiency for anime and game designers.'

https://windvchen.github.io/Sitcom-Crafter/

http://export.arxiv.org/abs/2410.10790

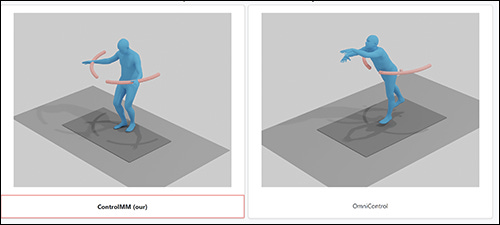

ControlMM: Controllable Masked Motion Generation

The project page for this LDM-based movement system features some interesting and promising examples of fidelity to denoted paths. Anything that improves instrumentality for diffusion-based generation is welcome, though the sheer number of similar such projects over the last 18 months would suggest that the target rendering environment (LDMs) is more capable and ready for this kind of framework than it probably is.

'Recent advances in motion diffusion models have enabled spatially controllable text-to-motion generation. However, despite achieving acceptable control precision, these models suffer from generation speed and fidelity limitations. To address these challenges, we propose ControlMM, a novel approach incorporating spatial control signals into the generative masked motion model. ControlMM achieves real-time, high-fidelity, and high-precision controllable motion generation simultaneously.'

http://export.arxiv.org/abs/2410.10780

https://exitudio.github.io/ControlMM-page/

4-LEGS: 4D Language Embedded Gaussian Splatting

Despite the name and the initial examples of animal rendering, this 3DGS-based system offers general effects for any subject matter.

'In our work, we are interested in connecting language with a dynamic modeling of the world. We show how to lift spatio-temporal features to a 4D representation based on 3D Gaussian Splatting. %, \gal{while introducing a feature-proximity attention mechanism that allows for neighboring features in 3D space to interact}. This enables an interactive interface where the user can spatiotemporally localize events in the video from text prompts. We demonstrate our system on public 3D video datasets of people and animals performing various actions.'

https://tau-vailab.github.io/4-LEGS/

http://export.arxiv.org/abs/2410.10719

TALK-Act: Enhance Textural-Awareness for 2D Speaking Avatar Reenactment with Diffusion Model

Avatar projects are one of the hottest pursuits in the literature in this period. However, talking heads are a cheap target for this kind of ControlNet-driven initiative; the limited motion of the people in typical desk-bound clips hardly reveals the many limitations that diffusion models currently face in generalizing to extreme views, or maintaining temporal/ID consistency.

'[We] propose the Motion-Enhanced Textural-Aware ModeLing for SpeaKing Avatar Reenactment (TALK-Act) framework, which enables high-fidelity avatar reenactment from only short footage of monocular video. Our key idea is to enhance the textural awareness with explicit motion guidance in diffusion modeling.'

https://guanjz20.github.io/projects/TALK-Act/

http://export.arxiv.org/abs/2410.10696

Efficient High-Resolution Image Synthesis with Linear Diffusion Transformer

NVIDIA offers native high-res diffusion generation, without the ‘repeating’ and other aberrations that occur when models trained on a certain resolution are asked to produce a higher resolution.

Though it has not made much of a splash yet, Sana’s ability to generate HQ images in one second on a conventional laptop are not easily dismissed.

'We introduce Sana, a text-to-image framework that can efficiently generate images up to 4096 × 4096 resolution. Sana can synthesize high-resolution, high-quality images with strong text-image alignment at a remarkably fast speed, deployable on laptop GPU.'

https://nvlabs.github.io/Sana/

http://export.arxiv.org/abs/2410.10629

Animate-X: Universal Character Image Animation with Enhanced Motion Representation

ControlNet’s skeletal pose facility surfaces again, in a project page that has some interesting sample videos (check out the ‘rabbit/woman’ from prior/rival system Unianimate in the Comparison with SOTA methods section).

This is the first paper I have seen that uses the ControlNet variant ControlNeXt.

'Animate-X, a universal animation framework based on LDM for various character types (collectively named X), including anthropomorphic characters. To enhance motion representation, we introduce the Pose Indicator, which captures comprehensive motion pattern from the driving video through both implicit and explicit manner. The former leverages CLIP visual features of a driving video to extract its gist of motion, like the overall movement pattern and temporal relations among motions, while the latter strengthens the generalization of LDM by simulating possible inputs in advance that may arise during inference.'

https://lucaria-academy.github.io/Animate-X/

https://arxiv.org/abs/2410.10306

My domain expertise is in AI image synthesis, and I’m the former science content head at Metaphysic.ai. I’m an occasional machine learning practitioner, and an educator. I’m also a native Brit, currently resident in Bucharest.

If you want to see more extensive examples of my writing on research, as well as some epic features (many of which hit big at Hacker News and garnered significant traffic), check out my portfolio website at https://martinanderson.ai.