Generative A-Eye #8 - 26th Sept,2024

A (more or less) daily newsletter featuring brief summaries of the latest papers related to AI-based human image synthesis, or to research related to this topic.

Today’s newsletter is a catch-up that includes yesterday’s scene entries that caught my eye.

The first and most interesting of them, I have already written up in an article at Unite.ai:

A New System for Temporally Consistent Stable Diffusion Video Characters

‘A new initiative from the Alibaba Group offers one of the best methods I have seen for generating full-body human avatars from a Stable Diffusion-based foundation model.

Titled MIMO (MIMicking with Object Interactions), the system uses a range of popular technologies and modules, including CGI-based human models and AnimateDiff, to enable temporally consistent character replacement in videos – or else to drive a character with a user-defined skeletal pose.’

https://menyifang.github.io/projects/MIMO/index.html

http://export.arxiv.org/abs/2409.16160

And my write-up: https://www.unite.ai/a-new-system-for-temporally-consistent-stable-diffusion-video-characters/

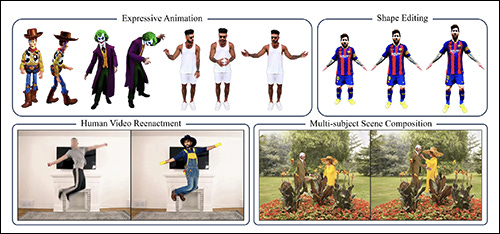

DreamWaltz-G: Expressive 3D Gaussian Avatars from Skeleton-Guided 2D Diffusion

An interesting GSplat system hindered, as so many similar papers are lately, by cutesy examples. This could be because of the AI backlash over deepfakes in 2024, or because the system is not that great at photorealistic output.

'[A] novel learning framework for animatable 3D avatar generation from text. The core of this framework lies in Skeleton-guided Score Distillation and Hybrid 3D Gaussian Avatar representation. Specifically, the proposed skeleton-guided score distillation integrates skeleton controls from 3D human templates into 2D diffusion models, enhancing the consistency of SDS supervision in terms of view and human pose. This facilitates the generation of high-quality avatars, mitigating issues such as multiple faces, extra limbs, and blurring.'

https://yukun-huang.github.io/DreamWaltz-G/

https://arxiv.org/abs/2409.17145

Single Image, Any Face: Generalisable 3D Face Generation

A latent diffusion-based face generator that has the courage to pit itself, in tests, against GAN-based methods (which really specialize in this sort of thing).

'[A] novel model, Gen3D-Face, which generates 3D human faces with unconstrained single image input within a multi-view consistent diffusion framework. Given a specific input image, our model first produces multi-view images, followed by neural surface construction. To incorporate face geometry information in a generalisable manner, we utilise input-conditioned mesh estimation instead of ground-truth mesh along with synthetic multi-view training data.'

http://export.arxiv.org/abs/2409.16990

Towards Unified 3D Hair Reconstruction from Single-View Portraits

The second Gaussian Splat-based hair synthesis method to crop up this week (read about the previous one in newsletter #7.

'[A] novel strategy to enable single-view 3D reconstruction for a variety of hair types via a unified pipeline. To achieve this, we first collect a large-scale synthetic multi-view hair dataset SynMvHair with diverse 3D hair in both braided and un-braided styles, and learn two diffusion priors specialized on hair. Then we optimize 3D Gaussian-based hair from the priors with two specially designed modules, i.e. view-wise and pixel-wise Gaussian refinement.'

https://unihair24.github.io/

http://export.arxiv.org/abs/2409.16863

TalkinNeRF: Animatable Neural Fields for Full-Body Talking Humans

It has been a while since the NeRF research strand has produced a candidate for human avatars. Gaussian Splatting has outstripped this technology in terms of qualitative results lately, particularly since GSplat offers explicit coordinates to aim at, whereas NeRF uses implicit coordinates that usually have to be inferred by a secondary system (such as FLAME or SMPL).

Anyway, take a look at the YouTube video below and see what you think. I’m not convinced.

'[A] novel framework that learns a dynamic neural radiance field (NeRF) for full-body talking humans from monocular videos. Prior work represents only the body pose or the face. However, humans communicate with their full body, combining body pose, hand gestures, as well as facial expressions. In this work, we propose TalkinNeRF, a unified NeRF-based network that represents the holistic 4D human motion. Given a monocular video of a subject, we learn corresponding modules for the body, face, and hands, that are combined together to generate the final result'

http://export.arxiv.org/abs/2409.16666

https://aggelinacha.github.io/TalkinNeRF/

Fine Tuning Text-to-Image Diffusion Models for Correcting Anomalous Images

Stable Diffusion V3 proved notorious for its inability to generate a plausible image of a woman lying on grass. As far as is known, this is because it cut the trained model’s connections between many human-based images and their semantic descriptions – presumably to impede the use of SD3 for porn or celebrity imitation (as it transpires, LoRA training and fine-tuning can remedy this).

This new paper directly addresses the issue - and I do mean directly, in that the solution arrived at appears to be on a ‘per case’ basis, and is intended to demonstrate the principle of addressing SD3’s defects.

‘Since the advent of GANs and VAEs, image generation models have continuously evolved, opening up various real-world applications with the introduction of Stable Diffusion and DALL-E models. These text-to-image models can generate high-quality images for fields such as art, design, and advertising. However, they often produce aberrant images for certain prompts. This study proposes a method to mitigate such issues by fine-tuning the Stable Diffusion 3 model using the DreamBooth technique. Experimental results targeting the prompt "lying on the grass/street" demonstrate that the fine-tuned model shows improved performance in visual evaluation and metrics such as Structural Similarity Index (SSIM), Peak Signal-to-Noise Ratio (PSNR), and Frechet Inception Distance (FID).’

http://export.arxiv.org/abs/2409.16174

https://github.com/hyoo14/Finetuned-SD3-Correcting-Anomalous-Images

Gaussian Déjà-vu: Creating Controllable 3D Gaussian Head-Avatars with Enhanced Generalization and Personalization Abilities

This new take on Gaussian head avatars comes, at least at the time of writing, with no accompanying project page or video, but may be worth a look, not least for its expression control features.

'[The system] first obtains a generalized model of the head avatar and then personalizes the result. The generalized model is trained on large 2D (synthetic and real) image datasets. This model provides a well-initialized 3D Gaussian head that is further refined using a monocular video to achieve the personalized head avatar. For personalizing, we propose learnable expression-aware rectification blendmaps to correct the initial 3D Gaussians, ensuring rapid convergence without the reliance on neural networks'

http://export.arxiv.org/abs/2409.16147

Other papers of interest

Fine-Tuning is Fine, if Calibrated

http://export.arxiv.org/abs/2409.16223

Revealing an Unattractivity Bias in Mental Reconstruction of Occluded Faces using Generative Image Models

http://export.arxiv.org/abs/2409.15443

Analysis of Human Perception in Distinguishing Real and AI-Generated Faces: An Eye-Tracking Based Study

http://export.arxiv.org/abs/2409.15498

Training Data Attribution: Was Your Model Secretly Trained On Data Created By Mine?

http://export.arxiv.org/abs/2409.15781

Zero-Shot Detection of AI-Generated Images

http://export.arxiv.org/abs/2409.15875

Seeing Faces in Things: A Model and Dataset for Pareidolia

https://mhamilton.net/facesinthings

http://export.arxiv.org/abs/2409.16143

My domain expertise is in AI image synthesis, and I’m the former science content head at Metaphysic.ai. I’m an occasional machine learning practitioner, and an educator. I’m also a native Brit, currently resident in Bucharest.

If you want to see more extensive examples of my writing on research, as well as some epic features (many of which hit big at Hacker News and garnered significant traffic), check out my portfolio website at https://martinanderson.ai.