Generative A-Eye #9 - 30th Sept, 2024

A (more or less) daily newsletter featuring brief summaries of the latest papers on AI-based human image synthesis, or on research adjacent to this topic.

This is a catch-up after some unavoidable deadline-driven delays. Here’s the best of what I saw cropping up in the literature for the covered dates…

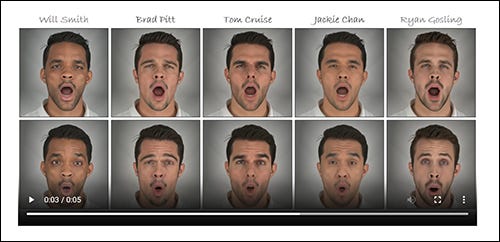

Stable Video Portraits

The results for this paper are not great in terms of fidelity to the target celebrities, but anything that can get temporal stability out of Stable Diffusion/LDMs may be worth picking apart for new approaches.

‘[A] novel hybrid 2D/3D generation method that outputs photorealistic videos of talking faces leveraging a large pre-trained text-to-image prior (2D), controlled via a 3DMM (3D). Specifically, we introduce a person-specific fine-tuning of a general 2D stable diffusion model which we lift to a video model by providing temporal 3DMM sequences as conditioning and by introducing a temporal denoising procedure. As an output, this model generates temporally smooth imagery of a person with 3DMM-based controls, i.e., a person-specific avatar. The facial appearance of this person-specific avatar can be edited and morphed to text-defined celebrities, without any fine-tuning at test time’

https://svp.is.tue.mpg.de/

http://export.arxiv.org/abs/2409.18083

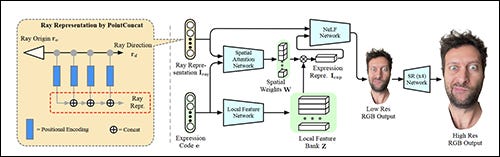

LightAvatar: Efficient Head Avatar as Dynamic Neural Light Field

A NeRF-family avatar system, built on neural light fields (NeLFs) rather than radiance fields, that concedes [SPOILER] that it can’t quite compete with Gaussian Splatting on a level playing field. Nonetheless, it’s interesting to see that NeRF-loving researchers are not giving up on human synthesis.

‘[One] major limitation of the NeRF-based avatars is the slow rendering speed due to the dense point sampling of NeRF, preventing them from broader utility on resource-constrained devices. We introduce LightAvatar, the first head avatar model based on neural light fields (NeLFs). LightAvatar renders an image from 3DMM parameters and a camera pose via a single network forward pass, without using mesh or volume rendering. The proposed approach, while being conceptually appealing, poses a significant challenge towards real-time efficiency and training stability. To resolve them, we introduce dedicated network designs to obtain proper representations for the NeLF model and maintain a low FLOPs budget’

http://export.arxiv.org/abs/2409.18057

https://github.com/MingSun-Tse/LightAvatar-TensorFlow
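
The abstract’s speed argument comes down to how many times a network must be queried to render one frame. This back-of-envelope sketch assumes a 512×512 output and 64 samples per ray (both hypothetical figures, not taken from the paper), and compares per-point queries for NeRF-style volume rendering with per-ray queries for a light field.

```python
# Back-of-envelope comparison of network queries per rendered frame.
# All numbers are illustrative assumptions, not figures from the LightAvatar paper.

def nerf_queries(height: int, width: int, samples_per_ray: int) -> int:
    """Volume rendering: one network query per sample point on every ray."""
    return height * width * samples_per_ray

def nelf_queries(height: int, width: int) -> int:
    """Light-field rendering: each ray maps straight to a colour, one query per ray."""
    return height * width

H, W = 512, 512   # assumed output resolution
SAMPLES = 64      # assumed NeRF samples per ray (hypothetical)

nerf = nerf_queries(H, W, SAMPLES)
nelf = nelf_queries(H, W)
print(f"NeRF-style queries per frame: {nerf:,}")
print(f"NeLF-style queries per frame: {nelf:,}")
print(f"Reduction factor: {nerf // nelf}x")
```

Query counts are not FLOPs: a light-field network generally has to do more work per query to make up for the missing samples, which is presumably why the authors stress keeping a low FLOPs budget. LightAvatar’s stated approach, a single forward pass per image, goes further than the per-ray count sketched here.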

Behaviour4All: in-the-wild Facial Behaviour Analysis Toolkit

Facial Expression Recognition (FER) is one of the most nascent and understudied fields in human image synthesis, not least because the psychological literature that fuels it is contentious. As it stands, many of the neural facial expression modeling systems that likely exist in the VFX sector are probably closer to ZBrush, i.e., ‘keep tinkering until it looks right’. So any paper that advances, or at least considers, the SOTA in this respect is welcome.

‘[A] comprehensive, open-source toolkit for in-the-wild facial behavior analysis, integrating Face Localization, Valence-Arousal Estimation, Basic Expression Recognition and Action Unit Detection, all within a single framework. Available in both CPU-only and GPU-accelerated versions, Behavior4All leverages 12 large-scale, in-the-wild datasets consisting of over 5 million images from diverse demographic groups. It introduces a novel framework that leverages distribution matching and label co-annotation to address tasks with non-overlapping annotations, encoding prior knowledge of their relatedness’

http://export.arxiv.org/abs/2409.17717

Disco4D: Disentangled 4D Human Generation and Animation from a Single Image

An interesting paper hampered by a lack of close-ups, with most of the supplementary examples looking like something out of Second Life. Nonetheless, we’re not so overwhelmed with GSplat human synthesis papers that we can neglect a contribution to the field.

‘[A] novel Gaussian Splatting framework for 4D human generation and animation from a single image. Different from existing methods, Disco4D distinctively disentangles clothings (with Gaussian models) from the human body (with SMPL-X model), significantly enhancing the generation details and flexibility.’

http://export.arxiv.org/abs/2409.17280

https://disco-4d.github.io/

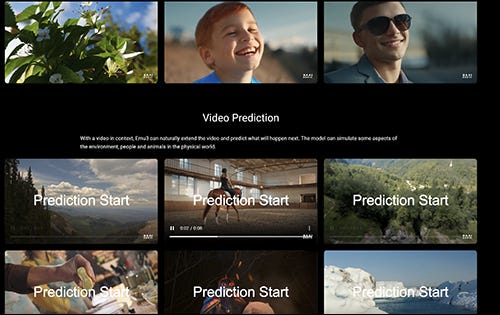

Emu3: Next-Token Prediction is All You Need

An interesting new architectural approach to video and image generation, but with results that sit squarely in the ‘Uncanny Valley 2.0’ territory familiar to the Stable Diffusion community. The avalanche of videos on the project page may severely tax your computer (one user reported a system crash), which is becoming a nasty habit with releases of this nature.

‘[A] new suite of state-of-the-art multimodal models trained solely with next-token prediction. By tokenizing images, text, and videos into a discrete space, we train a single transformer from scratch on a mixture of multimodal sequences. Emu3 outperforms several well-established task-specific models in both generation and perception tasks, surpassing flagship models such as SDXL and LLaVA-1.6, while eliminating the need for diffusion or compositional architectures’

http://export.arxiv.org/abs/2409.18869

https://emu.baai.ac.cn/about (video-heavy page may be hard to load)
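
The core idea in the Emu3 abstract, tokenizing every modality into one discrete space and training on plain next-token prediction, can be illustrated with a toy sketch. The vocabulary sizes, the offsetting scheme and the begin/end-image token ids are all hypothetical and are not Emu3’s actual tokenizer; the point is only that once an image is a list of codebook indices, a caption-plus-image pair becomes one flat sequence whose training targets are that same sequence shifted by one.

```python
# Toy illustration of "everything is a token": text and image codes share one
# vocabulary, and training targets are simply the sequence shifted by one.
# Vocabulary sizes and special-token ids are hypothetical, not Emu3's.

TEXT_VOCAB = 1000                    # assumed size of the text vocabulary
IMAGE_CODEBOOK = 512                 # assumed size of a discrete image codebook
BOI = TEXT_VOCAB + IMAGE_CODEBOOK    # hypothetical "begin image" token id
EOI = BOI + 1                        # hypothetical "end image" token id

def image_to_shared_ids(codes: list[int]) -> list[int]:
    """Offset image codebook indices so they don't collide with text ids."""
    for c in codes:
        if not 0 <= c < IMAGE_CODEBOOK:
            raise ValueError(f"image code out of range: {c}")
    return [TEXT_VOCAB + c for c in codes]

def build_sequence(text_ids: list[int], image_codes: list[int]) -> list[int]:
    """Concatenate a caption and an image into one flat token sequence."""
    return text_ids + [BOI] + image_to_shared_ids(image_codes) + [EOI]

def next_token_pairs(seq: list[int]) -> list[tuple[int, int]]:
    """Each position's training target is the token that follows it."""
    return list(zip(seq[:-1], seq[1:]))

caption = [17, 42, 5]        # pretend text-token ids
image = [3, 300, 511, 0]     # pretend image codebook indices
seq = build_sequence(caption, image)
print(seq)
print(next_token_pairs(seq)[:3])
```

In a real system the image codes would come from a learned visual tokenizer, and a single transformer would learn to predict each target from all the tokens before it; the sketch only shows the shared data layout, not the model.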

Other papers of interest

Space-time 2D Gaussian Splatting for Accurate Surface Reconstruction under Complex Dynamic Scenes

https://tb2-sy.github.io/st-2dgs/

http://export.arxiv.org/abs/2409.18852

Multi-hypotheses Conditioned Point Cloud Diffusion for 3D Human Reconstruction from Occluded Images

http://export.arxiv.org/abs/2409.18364

My domain expertise is in AI image synthesis, and I’m the former head of science content at Metaphysic.ai. I’m an occasional machine learning practitioner and an educator. I’m also a native Brit, currently resident in Bucharest.

If you want to see more extensive examples of my writing on research, as well as some epic features (many of which hit big on Hacker News and garnered significant traffic), check out my portfolio website at https://martinanderson.ai.