Generative A-Eye #6 - 23rd Sept, 2024

A (more or less) daily newsletter featuring brief summaries of the latest papers related to AI-based human image synthesis, or to research related to this topic.

Before the day’s papers, just to mention that I wrote a short feature at unite.ai about the difficulty of making a complete movie with an AI system.

Why Can’t Generative Video Systems Make Complete Movies? (unite.ai)

I hear so many people saying ‘any minute now’, in regard to narrative continuity in video generation systems. However, we heard similar cries after the debut of GANs and NeRF, neither of which ever proved amenable to true commercial exploitation. Gaussian Splat, anyone?

Portrait Video Editing Empowered by Multimodal Generative Priors

This offering from the University of Science and Technology of China has a project page full of lively examples of video-to-video transformations. This is one of the first architectures that I’ve seen that incorporates Gaussian Splatting in an end-to-end system rather than depending outright on it.

Perhaps the most interesting thing about it is the dedicated expression/face module. Until this project, nearly all the human synthesis frameworks I’ve seen have focused either on the body at the relative expense of the face, or vice versa. It always seemed to me that facial attention would end up as a sub-module of the new generation of full-body systems.

That said, the examples supplied at the video-strewn project page vary greatly in quality and levels of daftness.

‘PortraitGen, a powerful portrait video editing method that achieves consistent and expressive stylization with multimodal prompts. Traditional portrait video editing methods often struggle with 3D and temporal consistency, and typically lack in rendering quality and efficiency. To address these issues, we lift the portrait video frames to a unified dynamic 3D Gaussian field, which ensures structural and temporal coherence across frames. Furthermore, we design a novel Neural Gaussian Texture mechanism that not only enables sophisticated style editing but also achieves rendering speed over 100FPS’

http://export.arxiv.org/abs/2409.13591

https://ustc3dv.github.io/PortraitGen/

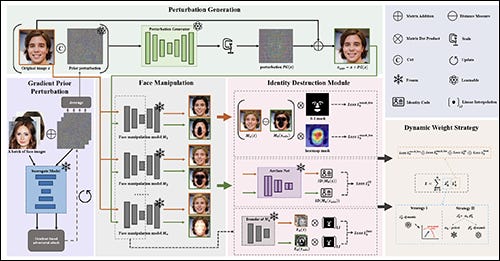

ID-Guard: A Universal Framework for Combating Facial Manipulation via Breaking Identification

This is not quite as convincing as the shake-based deepfake detection that I covered in a previous newsletter. Most prior approaches have concentrated on sub-signals in frequency analysis, and on other methods that have been in circulation since 2017 – and then, mostly for detection of deepfaked still images. Nonetheless, this paper might be worth a deeper look.

‘[This] framework requires only a single forward pass of an encoder-decoder network to generate a cross-model universal adversarial perturbation corresponding to a specific facial image. To ensure anonymity in manipulated facial images, a novel Identity Destruction Module (IDM) is introduced to destroy the identifiable information in forged faces targetedly. Additionally, we optimize the perturbations produced by considering the disruption towards different facial manipulations as a multi-task learning problem and design a dynamic weights strategy to improve cross-model performance.’
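For readers who want a feel for the two ideas in that abstract, here is a toy Python sketch. It is not the authors' code, and the details are my own assumptions: the `eps` budget, the softmax weighting rule, and the function names `apply_perturbation` and `dynamic_weights` are all hypothetical. It only illustrates (a) adding a small, bounded perturbation to an image, and (b) giving more training weight to whichever manipulation model is currently being disrupted the least.

```python
import math

def apply_perturbation(image, delta, eps=0.05):
    """Add a perturbation to an image given as a flat list of floats in [0, 1].

    Each per-pixel change is limited to +/- eps (an assumed budget; the
    abstract does not state one), and results are kept in the [0, 1] range.
    """
    protected = []
    for pixel, d in zip(image, delta):
        d = max(-eps, min(eps, d))                       # bound the change
        protected.append(max(0.0, min(1.0, pixel + d)))  # keep pixel valid
    return protected

def dynamic_weights(disruption_scores, temperature=1.0):
    """Turn per-model disruption scores into weights that sum to 1.

    Assumed rule (not taken from the paper): a softmax over the negated
    scores, so models that are disrupted less receive a larger weight.
    """
    exps = [math.exp(-s / temperature) for s in disruption_scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical values: three pixels, and two manipulation models where the
# second is currently being disrupted far less than the first.
protected = apply_perturbation([0.5, 0.99, 0.0], [0.2, 0.03, -0.5])
weights = dynamic_weights([0.9, 0.1])
```

In the paper, the perturbation comes from a single forward pass of an encoder-decoder network; here `delta` is simply passed in by hand, since the network itself is beyond the scope of a quick sketch.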

_________________________

My domain expertise is in AI image synthesis, and I’m the former science content head at Metaphysic.ai. I’m an AI developer, current machine learning practitioner, and an educator. I’m also a native Brit, currently resident in Bucharest, but possibly interested in relocation.

If you want to see more extensive examples of my writing on research, as well as some epic features, many of which hit big at Hacker News and garnered significant traffic, check out my portfolio website at https://martinanderson.ai.