Real-Time Deepfake Streaming Is Apparently Coming To World's Most-Used DeepFake Software

DeepFaceLive, a streaming implementation for models developed for DeepFaceLab, brings together the power of extended machine learning training with the immediacy of a real-time stream.

The creator of DeepFaceLab, the most-used open source software for creating deepfakes, is developing a streaming implementation that is apparently capable of rendering deepfakes in real time from models trained at length in DeepFaceLab [1].

In a Discord group for rival deepfake software FaceSwap, the otherwise anonymous developer Iperov shared a video demonstration of the system:

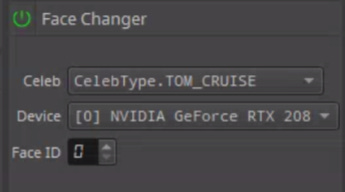

The demonstration uses pre-fake footage of Tom Cruise impersonator Miles Fisher, who, together with Belgian VFX artist and deepfaker Christopher Ume, made headlines in late winter with a series of sensational videos created with the full-fledged DeepFaceLab framework. It then applies a deepfake model selected from a drop-down menu.

The Cruise model, which will have taken somewhere between two and seven days to train, has been made available as an .onnx [2] file (complete with source code) on GitHub. It is currently the only model in the category CELEB_MODEL.

Presumably this drop-down list will eventually be populated by the end-user, who can then apply arduously-trained models to live streaming environments such as Zoom and Skype, and (given the porn-centric origins [3] of DeepFaceLab) to ‘cam’ scenarios.

The drop-down menu for the graphics card that is running DeepFaceLive is truncated. Based on what is visible, the card seems likely to be a GeForce RTX 2080 or the higher-VRAM 2080 Ti.

The interface shows a ‘target delay’ of 2 seconds, which would need to be improved in order to perform well in live chat streaming, though the latency that is already common in VoIP networks might cover this up to an extent.
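To put the two-second figure in context, here is a rough back-of-the-envelope sketch in Python. It assumes the commonly cited ITU-T G.114 guidance for conversational calls (a one-way delay of up to about 150 ms is comfortable, and 400 ms is the upper planning limit). The function name and the 100 ms network delay are illustrative assumptions, not measurements taken from DeepFaceLive.

```python
# Rough latency budget check for a processed video call.
# Thresholds follow the commonly cited ITU-T G.114 guidance for one-way delay;
# every other number here is an assumption made for illustration.
COMFORTABLE_MS = 150   # delay below this is generally not noticed
UPPER_LIMIT_MS = 400   # planning limit for conversational use


def classify_delay(render_delay_ms: float, network_delay_ms: float) -> str:
    """Classify the total one-way delay (rendering plus network)."""
    total = render_delay_ms + network_delay_ms
    if total <= COMFORTABLE_MS:
        return "comfortable"
    if total <= UPPER_LIMIT_MS:
        return "noticeable"
    return "disruptive"


# The 'target delay' shown in the demo interface is 2 seconds.
# The 100 ms network delay is a hypothetical figure.
print(classify_delay(render_delay_ms=2000, network_delay_ms=100))
```

On these assumptions, the rendering delay alone exceeds the conversational budget several times over, which is why the figure would need to come down for live chat.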

A rudimentary GitHub repository [4] has been created for DeepFaceLive, which, presumably, will make the system available on an open source basis.

In the Discord messages, Iperov describes the ‘Hard months to create high performance architecture’ for DeepFaceLive, which runs on PyTorch.

Solution: Just Ask The ‘Suspect’ Zoom Viewer To Look Left, Or Look Up

As anyone who read my recent piece about the Limited Future Of Deepfakes will know, I’m not a subscriber to apocalyptic headlines about the potential cultural chaos that might ensue in the near future from the use of deepfakes in fraud and/or political manipulation (well, actually I do believe this will happen, but much later, and through ‘pure’ image synthesis, and not the shambolic ministrations of DeepFaceLab).

This live streaming implementation of a DeepFaceLab model will inherit the traditional weaknesses of any deepfake output, including the inability of a model to sustain believable output at oblique angles.

Therefore, if you suspect someone has plugged a system like this into your video streaming call, just ask them to look up at the ceiling, or turn their head hard to one side. The facial lineaments will suddenly become ‘sizzling’ and abstract, or, more likely, completely reveal the underlying face of the caller.

This reliable ‘tell’ can be redressed to a certain extent by including a high number of ‘oblique’ images in the contributing dataset during training, but this leaves less focus in the latent space for more common facial poses, with increased realism in oblique angles paid for by diminished authenticity in face-on views.
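The trade-off can be made concrete by checking how a training set is distributed across head poses. The following is a minimal sketch and not part of DeepFaceLab: it assumes each face image already has a yaw estimate in degrees (however obtained), and the 45-degree cut-off for ‘oblique’ is an arbitrary threshold chosen only for illustration.

```python
from collections import Counter

OBLIQUE_YAW_DEG = 45.0  # hypothetical cut-off, not a DeepFaceLab setting


def pose_bucket(yaw_deg: float) -> str:
    """Label a face as 'frontal' or 'oblique' by absolute yaw angle."""
    return "oblique" if abs(yaw_deg) >= OBLIQUE_YAW_DEG else "frontal"


def pose_shares(yaws):
    """Return the fraction of images falling in each pose bucket."""
    if not yaws:
        raise ValueError("no yaw estimates supplied")
    counts = Counter(pose_bucket(y) for y in yaws)
    return {b: counts.get(b, 0) / len(yaws) for b in ("frontal", "oblique")}


# Made-up yaw estimates (degrees) for a ten-image dataset.
sample_yaws = [-5, 0, 3, 10, -12, 20, 8, -60, 2, 75]
print(pose_shares(sample_yaws))
```

A dataset that skews heavily towards the ‘frontal’ bucket gives the model little to learn from at extreme angles, while raising the ‘oblique’ share spends training capacity on poses that appear rarely in typical footage.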

These limitations come from the Facial Alignment Network that captures facial poses in DFL, and does not capture them well at extreme angles; from the great difficulty in obtaining a high number of ‘rare’ angles from any possible target subject (particularly sharply-rendered angles, without motion blur); and from the unwillingness of deepfake practitioners to redress this fault at the expense of the 90% of viewpoints that will work effectively, since it is easier to choose ‘deepfake-friendly’ footage that mostly features ‘face to camera’ angles than to really solve this problem systematically.

Nonetheless, DeepFaceLive offers a far more powerful approach to real-time deepfake footage than more rudimentary recent efforts such as Avatarify.

[1] https://github.com/iperov/DeepFaceLab

[2] Open Neural Network Exchange format - https://onnx.ai/

[3] mrdeepfakes[dot]com (the online community for pornographic celebrity deepfake development, centered on DeepFaceLab - definitely NSFW)

[4] Archive version: https://archive.is/AIG1B